Apache Helix internals

Follow @kishore_b_g

This is a follow-up to the Cloudera blog post http://blog.cloudera.com/blog/2013/09/distributed-systems-get-simpler-with-apache-helix/ that covered the core concepts in Helix. Here I get into some of the internals of Helix and how it responds to various cluster events.

Roles

Helix roles

Helix divides distributed system components into 3 logical roles, as shown in the above figure. It’s important to note that these are just logical components and can be physically co-located within the same process. Each of these logical components has an embedded agent that interacts with the other components via Zookeeper.

Controller: The controller is the core component in Helix and is the brain of the system. It hosts the state machine engine, runs the execution algorithm, and issues transitions against the distributed system.

Participant: Each node in the system that performs the core functionality is referred to as a Participant. The Participants run the Helix library, which invokes callbacks whenever the controller initiates a state transition on the Participant. The distributed system is responsible for implementing those callbacks. In this way, Helix remains agnostic of the semantics of a state transition and of how the transition is actually implemented. For example, in a LeaderStandby model, the Participant implements the methods OnBecomeLeaderFromStandby and OnBecomeStandbyFromLeader. In the above figure, p1 and p2 represent the partitions of a Resource (Database, Index, Task). Different colors represent the state (e.g. master/slave) of the partition.
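To make the callback idea concrete, here is a minimal sketch in Python. It does not use the Helix library: the class, the `dispatch` function, and the snake_case method names are all hypothetical stand-ins that only mirror the OnBecomeLeaderFromStandby / OnBecomeStandbyFromLeader callbacks named above.

```python
class LeaderStandbyParticipant:
    """Hypothetical participant; not the Helix API, just the callback pattern."""

    def __init__(self, name):
        self.name = name
        self.state = {}  # partition -> state this node currently holds

    def on_become_leader_from_standby(self, partition):
        # Application-specific work (e.g. start accepting writes) would go here.
        self.state[partition] = "LEADER"

    def on_become_standby_from_leader(self, partition):
        # Application-specific work (e.g. stop accepting writes) would go here.
        self.state[partition] = "STANDBY"


def dispatch(participant, partition, from_state, to_state):
    """Stand-in for the embedded agent: pick the callback from the transition."""
    if participant.state.get(partition) != from_state:
        raise ValueError(f"{partition} is not in state {from_state}")
    handler_name = f"on_become_{to_state.lower()}_from_{from_state.lower()}"
    handler = getattr(participant, handler_name, None)
    if handler is None:
        raise LookupError(f"no handler for {from_state} -> {to_state}")
    handler(partition)
    return participant.state[partition]
```

The dispatcher knows nothing about what a leader or a standby does; it only maps a (from, to) pair to a method name, which is the sense in which the framework stays agnostic of transition semantics.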

Spectator: This component represents the external entities that need to observe the state of the system. A Spectator is notified of state changes in the system so that it can interact with the appropriate Participant. For example, a routing/proxy component (a Spectator) can be notified when the routing table has changed, such as when a cluster is expanded or a node fails.
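As an illustration of the routing example, the sketch below rebuilds a routing table each time a new view of the cluster arrives. The `Router` class, the `on_view_change` hook, and the `{partition: {node: state}}` view format are assumptions made for this example, not Helix types.

```python
def build_routing_table(cluster_view):
    """Invert {partition: {node: state}} into {partition: {state: [nodes]}}."""
    table = {}
    for partition, replicas in cluster_view.items():
        by_state = table.setdefault(partition, {})
        for node, state in sorted(replicas.items()):
            by_state.setdefault(state, []).append(node)
    return table


class Router:
    """Hypothetical spectator that routes writes to the current master."""

    def __init__(self):
        self.table = {}

    def on_view_change(self, cluster_view):
        # Called whenever the spectator is notified of a state change.
        self.table = build_routing_table(cluster_view)

    def route_write(self, partition):
        masters = self.table.get(partition, {}).get("MASTER", [])
        return masters[0] if masters else None
```

After a failover, the next notification replaces the table, so subsequent writes go to the newly promoted node without the router knowing why the change happened.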

Interaction between components

What are the core requirements of a cluster management system?

- Cluster state/metadata store: Need a durable/persistent and fault tolerant store

- Notification when cluster state changes: the controller needs to detect when nodes start/fail and compute appropriate transitions required to bring the cluster to a healthy state.

- Communication channel between the components: when the controller chooses state transitions to execute, it must reliably communicate these transitions to participants. Once complete, the participants must communicate their status back to the controller.

Helix relies on Zookeeper to meet all of these requirements. By storing all the metadata/cluster state in Zookeeper, the controller itself is stateless and easy to replace on failure. Zookeeper also provides the notification mechanism when nodes start/stop. We also leverage Zookeeper to construct a reliable communication channel between controller and participants. The channel is modeled as a queue in Zookeeper and the controller and participants act as producers and consumers on this queue.

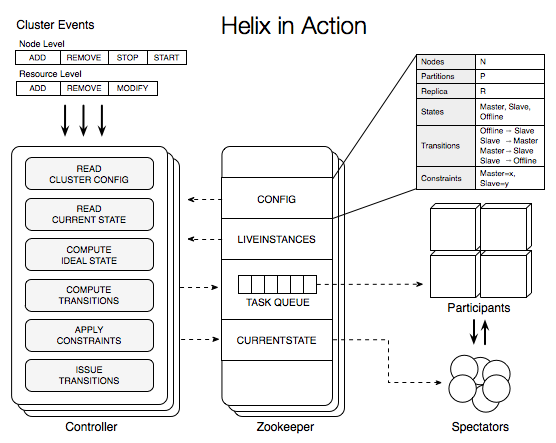
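The queue-based channel described above can be sketched with an in-memory stand-in for Zookeeper. Everything here is hypothetical (the `InMemoryStore` class, the message fields, and `participant_step`); a real deployment would use Zookeeper nodes and watches rather than Python dictionaries.

```python
from collections import deque


class InMemoryStore:
    """Stand-in for Zookeeper: per-participant queues plus reported state."""

    def __init__(self):
        self.queues = {}         # participant -> deque of pending messages
        self.current_state = {}  # participant -> {partition: state}

    def send(self, participant, message):
        # Controller side: produce a transition message.
        self.queues.setdefault(participant, deque()).append(message)

    def poll(self, participant):
        # Participant side: consume the oldest pending message, if any.
        queue = self.queues.get(participant)
        return queue.popleft() if queue else None

    def report(self, participant, partition, state):
        # Participant side: publish the outcome for the controller to read.
        self.current_state.setdefault(participant, {})[partition] = state


def participant_step(store, name):
    """Process one message; return False when the queue is empty."""
    message = store.poll(name)
    if message is None:
        return False
    store.report(name, message["partition"], message["to"])
    return True
```

Because both the messages and the reported state live in the store, neither side needs to hold durable state of its own, which matches the point about the controller being easy to replace.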

Helix in Action

Thus far we have described how distributed systems declare their behavior within Helix. Helix execution is a matter of continually monitoring the Participants’ state and, as necessary, ordering transitions on the Participants. There are a variety of changes that can occur within a system, both planned and unplanned: starting/stopping of Participants, adding or losing nodes, adding or deleting resources, adding or deleting partitions, among others.

The most crucial feature of the Helix execution algorithm is that it is identical across all of these changes and across all distributed systems! Otherwise, we would face a great deal of complexity trying to manage so many combinations of changes and systems, and would lose much of Helix’s generality. Let us now step through the Helix execution algorithm.

On every change in the cluster, the controller executes a fixed set of actions referred to as the rebalance pipeline. This pipeline consists of the following stages, which are executed sequentially:

- Read cluster configuration: Gathers all metadata about the system such as number of nodes and resources. For each resource, reads the number of partitions, replicas and the FSM (states and transitions) along with the system constraints such as Master:1, Slave:2.

- Read current state: In this stage, the controller reads the current state of the system. The current state reflects the state of each Participant as a result of acting on the transitions issued by the controller.

- Compute Idealstate: After collecting the required information (current state and system configuration), the core rebalancer component of Helix computes a new idealstate that satisfies the constraints. One can think of this as the constraint solver. Helix allows applications to plug in their own custom rebalancing strategy/algorithm. Helix comes with a default implementation that computes an idealstate that satisfies the constraints and achieves the following objectives:

1. Evenly distribute the load among all the nodes.

2. When a failure occurs, redistribute the load from failed nodes among remaining nodes while satisfying (1).

3. When a new node is added, move resources from existing nodes to new nodes while satisfying (1).

4. While satisfying (1), (2), (3), minimize the transitions required to reach idealstate from the currentstate.

- Compute transitions: Once the idealstate is computed, Helix looks up the state machine and computes the transitions required to take the system from its current state to idealstate.

- Apply constraints: Blindly issuing all the transitions computed in the previous step might result in violating constraints. Helix computes the subset of the transitions such that executing them in any order will not violate the system constraint.

- Issue transitions: This is the last step in the pipeline, where the transitions are sent to the Participants. On receiving a transition, the Helix agent embedded in the Participant invokes the appropriate transition handler provided by the Participant.
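The "compute transitions" and "apply constraints" stages can be illustrated with a small sketch. This is not Helix's implementation: the OFFLINE/SLAVE/MASTER state machine, the `NEXT_HOP` table, and the conservative filtering rule are assumptions chosen to match the Master:1, Slave:2 example above.

```python
# Hypothetical state machine: the next hop from a state towards a target state.
NEXT_HOP = {
    ("OFFLINE", "SLAVE"): "SLAVE",
    ("OFFLINE", "MASTER"): "SLAVE",
    ("SLAVE", "MASTER"): "MASTER",
    ("SLAVE", "OFFLINE"): "OFFLINE",
    ("MASTER", "SLAVE"): "SLAVE",
    ("MASTER", "OFFLINE"): "SLAVE",
}
MAX_PER_STATE = {"MASTER": 1, "SLAVE": 2}  # per-partition upper bounds


def compute_transitions(current, ideal):
    """current/ideal: {partition: {node: state}} -> [(partition, node, from, to)]."""
    transitions = []
    for partition in sorted(set(ideal) | set(current)):
        have_map = current.get(partition, {})
        want_map = ideal.get(partition, {})
        for node in sorted(set(have_map) | set(want_map)):
            have = have_map.get(node, "OFFLINE")
            want = want_map.get(node, "OFFLINE")
            if have != want:
                hop = NEXT_HOP[(have, want)]
                transitions.append((partition, node, have, hop))
    return transitions


def apply_constraints(current, transitions):
    """Keep a subset that cannot exceed MAX_PER_STATE in any execution order."""
    counts = {}
    for partition, replicas in current.items():
        for state in replicas.values():
            counts[(partition, state)] = counts.get((partition, state), 0) + 1
    allowed = []
    for partition, node, src, dst in transitions:
        used = counts.get((partition, dst), 0)
        limit = MAX_PER_STATE.get(dst)
        if limit is not None and used >= limit:
            continue  # defer: the target state has no free slot right now
        # Deliberately do not credit the slot freed in src: order is unknown.
        counts[(partition, dst)] = used + 1
        allowed.append((partition, node, src, dst))
    return allowed
```

When two replicas need to swap MASTER and SLAVE roles, this filter lets only the demotion through in the first pass; the promotion is deferred until a later run of the pipeline sees the freed MASTER slot in the current state.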

Once a transition is completed, its success or failure is reflected in the current state. The controller reads the current state and re-runs the rebalance pipeline until the currentstate converges to the idealstate. Once the currentstate is equal to the idealstate, the system is considered to be in a stable state. Having this simple goal of computing the idealstate and issuing appropriate transitions so that the currentstate converges to the idealstate is what makes the Helix controller generic.
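The convergence loop itself is simple enough to sketch in a few lines. The version below is a toy under stated assumptions: a hypothetical linear state machine (`ORDER`), transitions that always succeed, and no constraint filtering between rounds.

```python
ORDER = ["OFFLINE", "SLAVE", "MASTER"]  # hypothetical linear state machine


def one_hop(have, want):
    """Move one position along ORDER from `have` towards `want`."""
    i, j = ORDER.index(have), ORDER.index(want)
    return ORDER[i + (1 if j > i else -1)]


def run_until_converged(current, ideal, max_rounds=10):
    """current/ideal: {(partition, node): state}. Mutates current.

    Returns the number of pipeline rounds that issued transitions.
    Replicas present only in `current` are ignored in this toy version.
    """
    rounds = 0
    while True:
        pending = {
            key: one_hop(current.get(key, "OFFLINE"), want)
            for key, want in ideal.items()
            if current.get(key, "OFFLINE") != want
        }
        if not pending:
            return rounds  # currentstate equals idealstate: stable
        if rounds == max_rounds:
            raise RuntimeError("did not converge within max_rounds")
        # Stand-in for participants executing transitions and reporting back.
        current.update(pending)
        rounds += 1
```

Nothing in the loop depends on what caused the difference between the two maps, which is why the same controller logic can serve node failures, cluster expansion, and resource changes alike.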

Up next: I will pick one distributed system (distributed data store, pub/sub, or indexing system) and describe how one can design and build it using Helix.

Have additional questions? Contact us on IRC at #apachehelix or use our mailing list http://helix.incubator.apache.org/mail-lists.html. More info at http://helix.incubator.apache.org